Azure Cloud Implementation

Cloud Managed Services Case Study

Client

UK-based government entity

The Business Need

A UK-based government entity that deals with statistical information had an urgent requirement to build a data aggregation system to track the price variation of products across categories. The resulting workflow solution would enable data analysts to track daily product prices across categories and derive insights from them.

Challenges

The major challenge was to fully automate large-scale price tracking of products across various categories and sub-categories. Extraction had to run daily, followed by aggregation and validation. Because very high-quality data had to be delivered from unstructured sources, building the validation and data quality layer was the most challenging part of the automation.

Our Solution

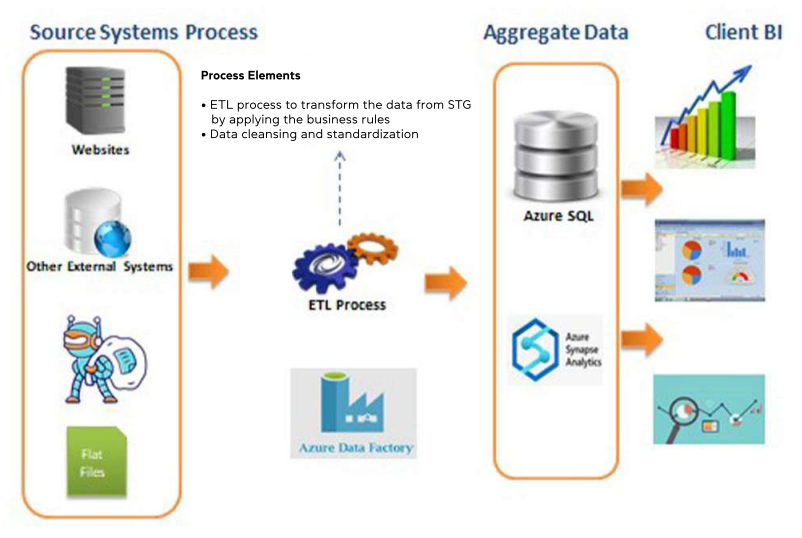

TechMobius developed a fully automated data aggregation system using Perl, Python, Azure Data Factory, and Azure SQL. As the first step, bots developed in Perl and Python crawl the list-page URLs of the source websites on a daily schedule. The extracted output is converted into flat files and placed in Azure Blob Storage. Azure Data Factory pipelines load the flat files from the blob into the Azure SQL database. Stored procedures in Azure SQL then process the input, and the delta (new) input is made available for product information collection.
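The delta step described above can be illustrated with a minimal Python sketch. It is not the stored procedure used in the project, which runs inside Azure SQL; it only shows the idea of keeping URLs that were crawled today but are not yet known. The column names (`category`, `product_url`), the function names, and the `example.com` URLs are hypothetical.

```python
import csv
import io


def read_urls(flat_file_text):
    """Parse a comma-separated flat file and return its product URLs in file order."""
    reader = csv.DictReader(io.StringIO(flat_file_text))
    return [row["product_url"].strip() for row in reader if row["product_url"].strip()]


def delta_new_urls(crawled_urls, known_urls):
    """Return URLs that are not already known, keeping order and dropping repeats."""
    seen = set(known_urls)
    delta = []
    for url in crawled_urls:
        if url not in seen:
            seen.add(url)
            delta.append(url)
    return delta


# Hypothetical flat file produced by one day's list-page crawl.
todays_file = (
    "category,product_url\n"
    "groceries,https://example.com/p/1\n"
    "groceries,https://example.com/p/2\n"
    "groceries,https://example.com/p/2\n"
    "electronics,https://example.com/p/3\n"
)

# Hypothetical set of URLs already loaded on earlier days.
known = {"https://example.com/p/1"}

delta = delta_new_urls(read_urls(todays_file), known)
print(delta)
```

Only the URLs in `delta` would be passed on for product information collection, so products that were already captured are not queued again as new input.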

Crawl bots consume the product information collection input from the blob. Product information is then extracted, and the output is again converted into flat files and placed in the blob under a different path. Scheduled pipelines load those product information files into the database and apply standardization and post-processing according to a given set of rules. This approach is used for all categories and sub-categories, with different pipelines and Azure SQL objects depending on the defined schema attributes. At the end of each month, the daily collected output is validated and sent for quality audit against client-defined percentages at the attribute level. Based on the internal and quality audit review comments, fixes are made to the data, which is finally delivered to the customer as flat files.
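As a rough illustration of the month-end check, the sketch below compares each attribute against a required percentage. It assumes that the client-defined percentage is a minimum fill rate (the share of records with a non-empty value); the case study does not say how the percentage is measured, so this is only one possible reading. The attribute names, thresholds, and sample records are hypothetical.

```python
def fill_rates(records, attributes):
    """Return, per attribute, the percentage of records with a non-empty value."""
    total = len(records)
    rates = {}
    for attr in attributes:
        filled = 0
        for record in records:
            value = record.get(attr)
            if value is not None and str(value).strip() != "":
                filled += 1
        rates[attr] = 100.0 * filled / total if total else 0.0
    return rates


def audit(records, thresholds):
    """Return the attributes whose fill rate is below the required percentage."""
    rates = fill_rates(records, list(thresholds))
    return {attr: rates[attr] for attr, minimum in thresholds.items() if rates[attr] < minimum}


# Hypothetical product records collected during the month.
records = [
    {"name": "Milk 1L", "price": "1.20", "brand": "Acme"},
    {"name": "Bread", "price": "0.95", "brand": ""},
    {"name": "Eggs", "price": "", "brand": None},
    {"name": "Tea", "price": "2.50", "brand": "Acme"},
]

# Hypothetical client-defined minimum fill rates, in percent.
thresholds = {"name": 100.0, "price": 90.0, "brand": 50.0}

failures = audit(records, thresholds)
print(failures)
```

Attributes listed in `failures` would be the ones sent back for fixes before delivery; an attribute that exactly meets its threshold passes.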

Highlights

The solution delivered category-wise datasets covering various e-commerce sources in the UK region, improving operational efficiency at the client end. The additional data quality activities enriched the data, which can be readily consumed for analytics at the client end.

For a detailed presentation of specific use cases, please write back to us at support@techmobius.com

The Business Need

Based out of UK, a government entity which deals with statistical information had urgent requirement to build a data aggregation system to track the pricing variation products of categories. The workflow solution thus developed would enable the data analyst to get the daily track of pricing of the products across categories and get the insight out of that.

Challenges

The major challenge was to fully automate large-scale pricing tracking of the products across various categories and sub-categories. Extraction should be done on daily basis and aggregation / validation need to be done post that. We have to deliver very high quality of data from the unstructured sources and so building up the validation and data quality layer was very challenging in the automation process.

The Mobius Solution

Top Challengers/ How Can We help/ Why us?

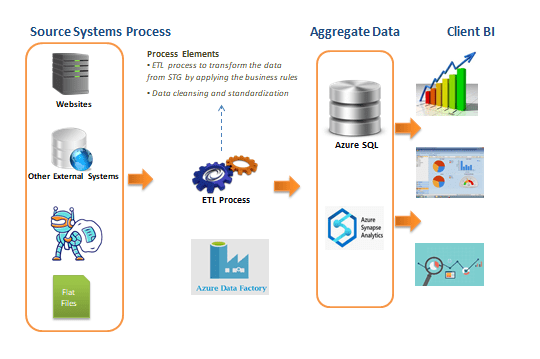

Mobius developed a complete automated data aggregation system using Perl, Python, Azure data factory and Azure SQL. Source websites list page URLs will be crawled as first step using the bots developed in Perl/python on daily schedule. Extracted output will be converted into flat files and placed in the Azure blob. We build Azure data factory pipelines to load the flat files from the blob to Azure SQL database. Using store procedures in Azure SQL, input processing will be done and delta new input will be placed for product information collection.

Crawl bots will consume the product information collection input from the blob. Again product information extraction will happen and extracted output converted flat files will be placed to blob again in different path. Schedule pipelines would load those product information files to the database and proceed with standardization and post processing with the given set of rules. This approach is used for all the categories / sub categories and we have different pipelines / azure SQL based on the defined schema attributes.

End of the month, daily collected output will be validated and sent for quality audit against client defined percentage at attribute levels. Based on the internal and quality audit review comments, fix will be made to the data and finally delivered to the customer as flat files.

Results

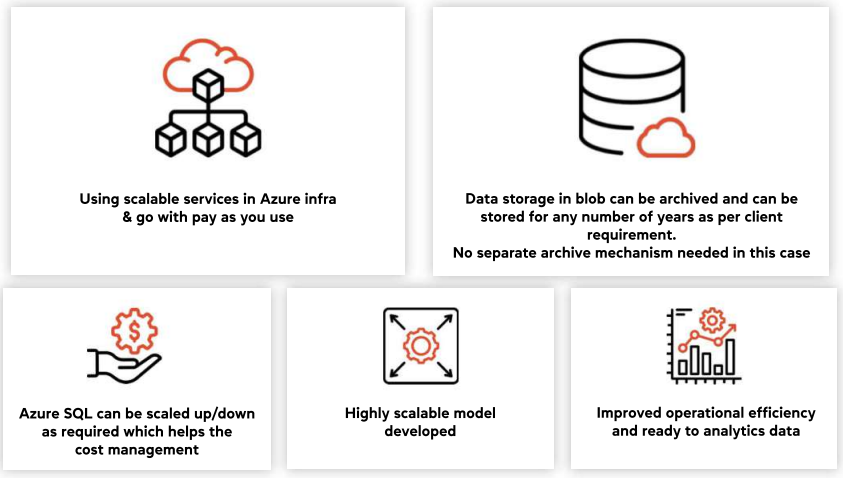

Scalable Azure services are used on a pay-as-you-use basis.

Azure SQL can be scaled up or down as required, which helps with cost management.

Data in blob storage can be archived and retained for any number of years as per the client's requirement, so no separate archive mechanism is needed.

A highly scalable model was developed.

Operational efficiency improved, and analytics-ready data was delivered.
